Previously I’ve noted the similarities between computer programming and writing fiction, saying both attracted their own practitioners. I then explained why I view computer programming, as well as computer programs, as forms of art.

How else is writing fiction like computer programming? From practicing both, one common aspect I’ve noticed is their repetitive nature. Both are iterative processes.

I cannot in good faith declare any fiction I’ve written “done” until I’ve read the final draft from start to finish eight or more times. (Usually the number is higher.) With each read, sentences are moved or removed, paragraphs rearranged, punctuation revised, word choices evaluated, and so on. Shaping prose is one of the most important skills a writer can cultivate. (Journalists do this in their sleep. Minutes after the final out, San Francisco Chronicle baseball writer Susan Slusser files a game summary that is polished, informative, and to the point.)

In fiction, editing is usually described as fine-tuning a manuscript, but more often it’s about being bold—knowing when to strike a paragraph, a page, or even a chapter, all in the service of a better story.

As any computer programmer can tell you, this is a familiar process. Programmers probably spend more time at the keyboard revising existing code than writing new code. Small edits to a program, similar to line edits or tweaks in word choice, are common enough. For major surgery, though, programmers often use a special word: refactoring. Refactoring is restructuring existing code without changing its external behavior. (It’s usually done to make the code easier to read and maintain, not to add a new feature or fix a bug.)

That’s the crux: without changing its external behavior. It’s funny. In fiction, bold changes count as a success if the story seems “new” or “better” to a reader. In code, a bold refactoring counts as a success only if the program operates exactly as it did before.
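A small, hypothetical example makes the idea concrete. The function names below (`word_counts_before`, `word_counts_after`) are invented for illustration and do not come from any real program. The second version is restructured to be shorter and easier to read. For the sample inputs it returns exactly what the first version returns, and that sameness is the whole point of a refactoring. A few sample inputs are evidence, not proof.

```python
from collections import Counter

# Hypothetical example: the same behavior before and after a refactoring.

def word_counts_before(text):
    """Original version: correct, but wordy."""
    counts = {}
    for word in text.lower().split():
        if word in counts:
            counts[word] = counts[word] + 1
        else:
            counts[word] = 1
    return counts


def word_counts_after(text):
    """Refactored version: restructured, but meant to behave identically."""
    return dict(Counter(text.lower().split()))


# The refactoring "succeeds" only if callers can't tell the difference.
samples = ["", "the cat and the hat", "To be or not to be"]
for sample in samples:
    assert word_counts_before(sample) == word_counts_after(sample)
print("Refactoring preserved behavior on all samples.")
```

In practice, checks like the loop above usually live in a test suite that is run before and after the change.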

The Ouroboros

I enjoy reading how other authors developed their fiction. Authors selected for Best American Short Stories (and other volumes in the Best American series) are given the opportunity to write a capsule for the books’ back matter. They often discuss the inspiration for the story and how external factors shaped its outcome. Writers’ correspondence is another goldmine for learning about the creative process. (In particular, I recommend Raymond Chandler’s Selected Letters, which is a master class in writing, style, and technique.)

When authors discuss how they developed a story, I’ve noticed they often can’t pin down the exact moment of inspiration. There might be some flash that launches the creative process, but writers frequently confess that their stories came from a nagging itch to write about a subject or develop some character trait. Long-forgotten inspiration will come roaring back to life for some reason. Writers sometimes talk about stories as though they “demanded” to be written.

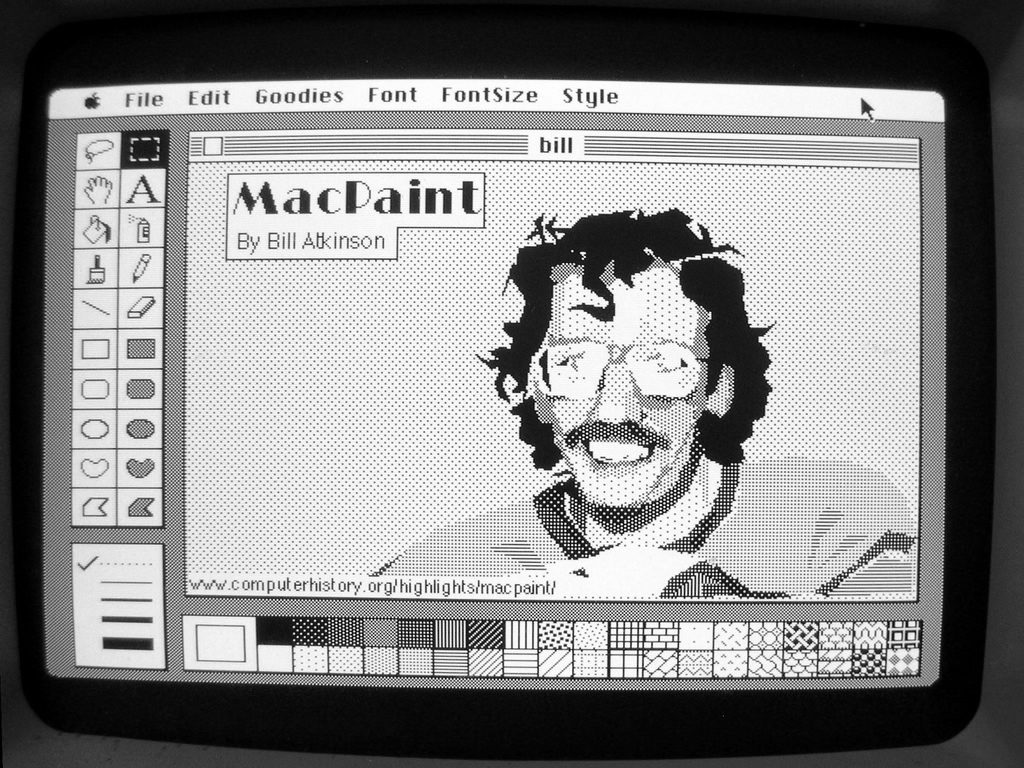

Programmers have similar stories, although the inspiration may not be as abstract as, say, a line of dialogue or a character detail. Usually a need drives the creation of new software: “I wish there were a website where I could connect with all my friends” (social networking) or “I wish I had a typewriter that made it easy to correct mistakes and would even check my spelling for me” (word processor).

Many times I’ve read of authors returning to old work and fighting (or succumbing to) the urge to edit it. The edits may be only a comma here, a semicolon there. Or they may be larger, striking paragraphs or scenes in pursuit of a tighter tale. Programmers deal with this urge too, always looking to tighten up code and make it more efficient or elegant.

I’ve quoted this elsewhere, but it’s worth repeating:

…software development is an iterative and incremental process. Each stage of the process is revisited repeatedly during the development, and each visit refines the end product of that stage. In general, the process has no beginning and no end. [Italics mine.]

That was written by Bjarne Stroustrup, the inventor of the C++ programming language. Everything in this quote pertains to writing fiction as much as it pertains to writing code.

When I edit a story, I revisit it again and again to refine the language and make sure it flows smoothly. Programming has a similar process: the continual revisiting and revising of code to remove flab and tighten its execution.

That’s what Stroustrup meant when he said the process has no beginning and no end. Stories and computer programs are never finished. They can always be made a bit better.

Sometimes alteration worsens the final product. When coding, I often refer to serious changes as “surgery.” Surgery may be necessary, but it’s possible to hurt the program while improving it. Touching code in one place can break code in another place. This is why you’ll sometimes download an update to an app and find it slower or simply broken, even though the developer swears they’ve made improvements.
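Here is a minimal, hypothetical sketch of how touching code in one place can break code in another. All the names (`tidy_roster_v1`, `tidy_roster_v2`, `first_alphabetically`) are invented for illustration. The second helper is arguably an improvement, because it no longer modifies the list it is given. However, a caller written elsewhere quietly depended on that old side effect, and now it returns the wrong answer.

```python
# Hypothetical example: an "improvement" in one place breaks code elsewhere.

def tidy_roster_v1(names):
    """Original helper: sorts the caller's list in place."""
    names.sort()
    return names


def tidy_roster_v2(names):
    """'Improved' helper: returns a new sorted list, leaving the input alone."""
    return sorted(names)


def first_alphabetically(names, tidy):
    """Code written elsewhere that relied on the in-place sort."""
    tidy(names)  # return value ignored; assumes names is now sorted
    return names[0]


print(first_alphabetically(["carol", "alice", "bob"], tidy_roster_v1))  # alice
print(first_alphabetically(["carol", "alice", "bob"], tidy_roster_v2))  # carol
```

Nothing inside `tidy_roster_v2` is wrong on its own. The bug shows up in code the change never touched, which is why a seemingly safe edit can still make an update worse.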

Likewise, fussing over a novel or story can hurt it. In the original editions of The Martian Chronicles, the chapters were dated like a diary, starting in 1999 and ending in 2026. Today, revised editions use dates from 2030 to 2057. It’s a small change, undoubtedly made to keep the story set “in the future,” but it stole away some of the book’s charm. In my youth, 1999 was a magical date, an odometer about to roll over, signaling a shift to the bold 21st century. 2030 is just another number.

A common adage among software developers is “Don’t fix what’s not broken.” The same can be said for fiction.

Distillation

Programmer Ben Sandofsky observes:

Treat yourself [the programmer] as a writer and approach each commit as a chapter in a book. Writers don’t publish first drafts. Michael Crichton said, “Great books aren’t written– they’re rewritten.”

Sandofsky is urging programmers not to make hasty changes to a program, but to edit and revise those changes before officially adding them to it.

Late in the editing process, I’ll often read my stories aloud to make sure they flow well. I’ve never read my code aloud (for the most part, computer languages aren’t like human languages), but I’ve certainly gone over my code closely, line by line, before committing it.

I often use the word distill for both pursuits: to purify, condense, and strengthen through repetition. Writers and coders don’t simply edit their work; they distill it down to its essence.

Lazy writing makes for boring reading. Lazy programming makes for buggy software. As Stroustrup put it, in general the process has no beginning and no end. The art is knowing when to let go and release your hard work to the world.